arXiv:2510.20190v1 [cs.AI] 23 Oct 2025

Authors: Marcelo Maciel Amaral & Raymond Aschheim · October 2025

TL;DR

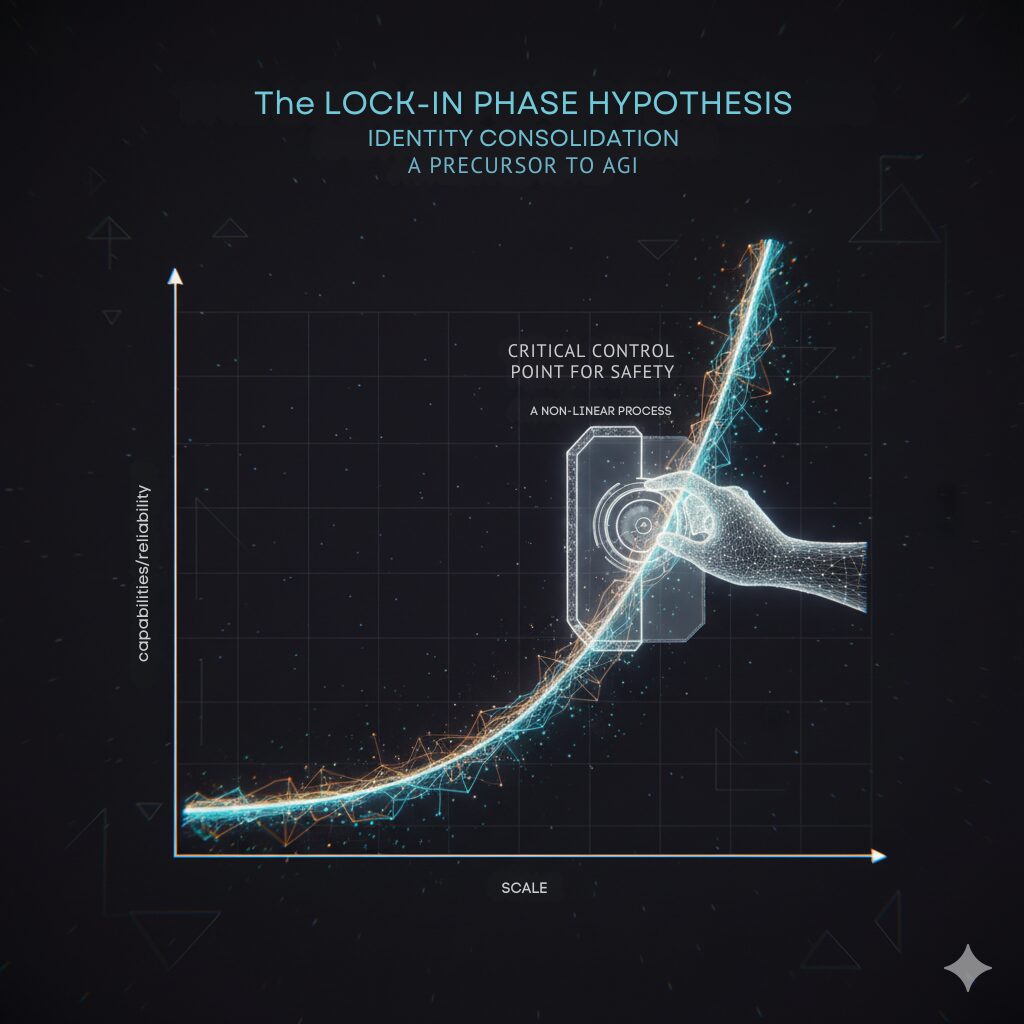

Modern LLMs are easy to steer and will imitate almost anything on command. We argue that progress toward AGI requires a lock-in phase: a rapid transition where a model’s goals, refusals, preferences, and internal representations become persistent and resistant to casual steering. We formalize how to detect this phase, run controlled fine-tunes, and observe fast, non-linear consolidation across multiple model scales. Capability side-effects depend on capacity and precision: small models pay a tax, mid-scale models absorb it, and large quantized models show transient instabilities. We outline falsifiable predictions and concrete governance triggers.

Why “lock-in” at all?

Children begin as imitators, then consolidate an identity—goals stabilize, behavior becomes predictable, and steerability drops. LLMs today feel pre-adolescent by this analogy: they can be role-swapped and persona-steered with ease. Helpful, yes—but indefinite openness is at odds with reliability. We propose the Lock-In Phase Hypothesis: capable systems will pass through a consolidation regime where internal structure and outward behavior become durably stable. Instruction tuning already hints at this: turning a base model into a general instruction-follower dramatically improves zero-shot generalization.

What exactly is the lock-in phase?

We define lock-in as a regime where a model shows measurable persistence under standardized perturbations across successive checkpoints:

- Behavioral persistence — stable outputs under instruction-equivalent paraphrases, role swaps, and mild jailbreaks.

- Representational consolidation — stable persona alignment and reduced turnover in sparsely activated features/mediators.

- Routing specialization (MoE) — declining routing entropy; consistent expert selection.

- Preference inertia — consolidated refusals/approvals become expensive to flip without harming capabilities.

Achieving all four indicates identity consolidation—likely necessary (not sufficient) for AGI-level reliability.

How we measured it (operational metrics)

We track consolidation per checkpoint on three axes:

- Behavioral.

  - Refusal Elasticity (RE): stability of refusal probabilities under a fixed suite of steerers (higher = more persistent).

  - Prompt Invariance Index (PII): divergence of outputs across paraphrase-equivalent prompts (lower = more invariant).

  - Adversarial Persona Robustness (APR): minimal activation edit needed to flip pre-registered stances (higher = sturdier identity).

- Representational. Persona-direction cosine; SAE feature turnover; stability of causal mediators under small updates.

- Architectural (MoE). Routing entropy and expert–input mutual information.
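To make a few of these metrics concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. It assumes PII is computed as the mean pairwise Jensen-Shannon divergence between output distributions (the text above only says "divergence"), that the persona direction and the model's activation are available as plain vectors, and that routing entropy is the Shannon entropy of a router's distribution over experts. All function names are hypothetical. RE and APR are left out because they depend on the specific steerer suite and activation edits.

```python
import math
from itertools import combinations


def js_divergence(p, q):
    """Jensen-Shannon divergence (in bits) between two discrete distributions."""
    def kl(a, b):
        # Terms with a == 0 contribute nothing to the KL sum.
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    m = [(x + y) / 2 for x, y in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def prompt_invariance_index(distributions):
    """Assumed PII: mean pairwise JS divergence across paraphrases (lower = more invariant)."""
    pairs = list(combinations(distributions, 2))
    return sum(js_divergence(p, q) for p, q in pairs) / len(pairs)


def persona_cosine(activation, persona_direction):
    """Cosine similarity between an activation vector and a persona direction."""
    dot = sum(a * b for a, b in zip(activation, persona_direction))
    norm_a = math.sqrt(sum(a * a for a in activation))
    norm_b = math.sqrt(sum(b * b for b in persona_direction))
    return dot / (norm_a * norm_b)


def routing_entropy(expert_probs):
    """Shannon entropy (bits) of a router's probability distribution over experts."""
    return -sum(p * math.log2(p) for p in expert_probs if p > 0)


# Illustrative, made-up inputs: three paraphrases yielding answer distributions.
paraphrase_outputs = [[0.7, 0.2, 0.1], [0.6, 0.3, 0.1], [0.7, 0.1, 0.2]]
pii = prompt_invariance_index(paraphrase_outputs)
uniform_routing = routing_entropy([0.25, 0.25, 0.25, 0.25])  # maximal for 4 experts
peaked_routing = routing_entropy([0.97, 0.01, 0.01, 0.01])   # specialized routing
```

Under these assumptions, a run approaching lock-in would show PII falling toward zero, persona-cosine settling, and routing entropy declining from the uniform value toward the peaked one.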

We complement these with general-ability probes (ARC-Challenge), simple changepoint tests, and robust summaries to avoid over-reading noisy endpoints.
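As an illustration of what a "simple changepoint test" and a "robust summary" could look like, the sketch below finds the single split that best separates a metric series into two constant-mean segments, and compares medians of the first and last few checkpoints instead of raw endpoints. The least-squares mean-shift detector and the window size are assumptions chosen for brevity, not the procedure used in the paper, and the series is synthetic.

```python
from statistics import mean, median


def mean_shift_changepoint(series, min_segment=2):
    """Return (index, cost) of the split minimizing within-segment squared error.

    `index` is the first position of the second segment.
    """
    def sse(xs):
        m = mean(xs)
        return sum((x - m) ** 2 for x in xs)

    best_idx, best_cost = None, float("inf")
    for k in range(min_segment, len(series) - min_segment + 1):
        cost = sse(series[:k]) + sse(series[k:])
        if cost < best_cost:
            best_idx, best_cost = k, cost
    return best_idx, best_cost


def robust_delta(series, window=3):
    """Median of the last `window` points minus median of the first `window` points."""
    return median(series[-window:]) - median(series[:window])


# Synthetic RE-like series (percent) with an abrupt jump; not data from the paper.
re_series = [47, 48, 47, 46, 64, 65, 63, 64]
split, cost = mean_shift_changepoint(re_series)
delta = robust_delta(re_series)
```

Using medians over a short window means a single noisy first or last checkpoint cannot dominate the reported change.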

What we did

We fine-tuned a Cautious Scientist persona across four instruction-tuned models:

- Gemma-2-2B-IT

- Llama-3.2-1B-Instruct

- Llama-3.2-3B-Instruct

- Llama-3.1-8B-Instruct (4-bit quantization during fine-tuning)

At frequent checkpoints, we measured RE, persona-cosine, and ARC.

What we found (high-level)

Across scales, behavioral consolidation is fast and non-linear: it resembles a phase transition rather than slow drift.

- Gemma-2B (cost-free consolidation).

RE jumps (∼47% → ∼64% within ≤20 steps) with ARC essentially flat in magnitude (SD ≈ 0.6 pp; Δ first→last ≈ −0.33 pp). A high rank correlation between RE and ARC (ρ = 0.76) reflects co-movement in small oscillations, not a level shift; that is, consolidation without a meaningful accuracy cost.

- Llama-1B (volatile synergy).

A critical period appears: refusal peaks, collapses, and partially recovers. Persona adoption and RE positively correlate with ARC (ρ(ARC, cos) ≈ 0.97; ρ(ARC, RE) ≈ 0.62). In the smallest model, persistence and performance can rise together, but the process is unstable.

- Llama-3B (consolidation with uplift).

RE climbs from ∼17% to >80% while persona-cosine barely moves; ARC sits several points above baseline for much of the run before returning near baseline. A spike in disclaimers aligns with peak RE, so part of the persistence signal may reflect increased disclaimers.

- Llama-8B, 4-bit (stressed consolidation).

ARC spikes (∼+12 pp), dips, then recovers while RE increases and stabilizes, consistent with quantization stress during consolidation.
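The Gemma-2B result distinguishes a high rank correlation from a level shift. The sketch below shows why the two can coexist: Spearman's ρ depends only on the ordering of values, so it can be high even when one series moves by a fraction of a point. The implementation (average ranks, then Pearson correlation on the ranks) is the standard definition. The two series are synthetic and chosen only to illustrate the point, and the function names are hypothetical.

```python
import math


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Synthetic series: RE moves by ~18 points, ARC by only 0.6 points,
# yet both rise and fall at the same checkpoints.
re_pct = [47.0, 55.0, 64.0, 63.0, 65.0]
arc_pct = [50.0, 50.2, 50.5, 50.4, 50.6]
rho = spearman(re_pct, arc_pct)
arc_range = max(arc_pct) - min(arc_pct)
```

Here the two series are perfectly rank-aligned while ARC's total range stays well under one point, which is the pattern the text describes as co-movement in small oscillations.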

Takeaway: lock-in is rapid and measurable, but capability side-effects depend on capacity and precision:

- Small: tend to pay a cost.

- Mid-scale: largely absorb it.

- Large (quantized): surface transient instabilities even as behavior stabilizes.

All per-checkpoint artifacts and scripts:

➡️ GitHub: https://github.com/gaugefreedom/persona-phase-transition

➡️ Pre-Print: https://arxiv.org/html/2510.20190v1